Rust Development for the Raspberry Pi on Apple Silicon

Update (18.02.2023): Updated the article to reflect the usage of VSCode remote development instead of JetBrains Gateway

A few weeks ago I started building a Rust project for the Raspberry Pi using my brand new MacBook Pro with an M1 chip (the old MacBook Pro from late 2013 still works, but it is simply too slow for the work I’m doing these days).

As it turns out, this isn’t as trivial as I thought it would be. Here’s what I learned.

Attempt 1: developing on macOS, building on the Raspberry Pi

This approach is more or less guaranteed to work, but it’s slow. It also requires synchronizing the source files from the development machine to the Pi after every change, which is a tedious process in itself.

Attempt 2: developing and building directly on macOS

Rust supports cross-compilation.
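As a rough sketch of what that looks like in practice, the commands below add a target to the toolchain and build for it. The target triple is an assumption (`armv7-unknown-linux-gnueabihf` suits a 32-bit OS on a Pi 2, 3 or 4; other models need a different triple), and a linker for that target also has to be available on the build machine.

```shell
# Assumed target triple; adjust it to the Pi model and OS in use.
TARGET="armv7-unknown-linux-gnueabihf"

# Installs the Rust standard library for the target, then builds for it.
cross_compile() {
    rustup target add "$TARGET" &&
    cargo build --release --target "$TARGET"
}

# Usage, from the project root:
#   cross_compile
```

The resulting executable ends up under `target/<triple>/release/` rather than `target/release/`.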

This approach works fine as long as the project doesn’t depend on crates that bind to libraries written for Linux, such as the rpi_ws281x library.

Attempt 3: developing and building remotely on a Linux machine with Cargo

Since I have a few Linux machines lying around, I decided to use one of them to build the executable and deploy it to the Raspberry Pi. I found a thorough article describing the Cargo setup needed to get cross-compilation to work.
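For illustration, here is a minimal sketch of such a setup (not necessarily the one from that article). It assumes a Debian or Ubuntu build machine, the `armv7-unknown-linux-gnueabihf` target, and the cross linker from the `gcc-arm-linux-gnueabihf` package. The helper function name is made up for this example.

```shell
TARGET="armv7-unknown-linux-gnueabihf"   # assumed target triple
LINKER="arm-linux-gnueabihf-gcc"         # from the gcc-arm-linux-gnueabihf package

# Writes a per-project Cargo configuration that tells Cargo which linker
# to use when building for the target.
write_cargo_config() {
    project_dir="$1"
    mkdir -p "$project_dir/.cargo"
    cat > "$project_dir/.cargo/config.toml" <<EOF
[target.$TARGET]
linker = "$LINKER"
EOF
}

# Usage, from the project root on the Linux build machine:
#   sudo apt install gcc-arm-linux-gnueabihf
#   rustup target add "$TARGET"
#   write_cargo_config .
#   cargo build --release --target "$TARGET"
```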

In order to continue development on the Mac (well, sort of) I used JetBrains Gateway, which mostly works.

With this approach, the project did compile… but running the executable on the Raspberry Pi failed. The GLIBC version on Raspbian OS was too old compared to the one on the Linux machine that built the binary.
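One way to spot this kind of mismatch before deploying is to compare the newest GLIBC symbol version the binary asks for with what the Pi provides. The helper below is a sketch with a made-up name. It only parses the text that `objdump -T` prints, and the binary path in the usage comment is hypothetical.

```shell
# Reads `objdump -T <binary>` output on stdin and prints the newest
# GLIBC symbol version that the binary requires.
max_glibc_required() {
    grep -o 'GLIBC_[0-9][0-9.]*' |
        sed 's/^GLIBC_//' |
        sort -t. -k1,1n -k2,2n -k3,3n |
        tail -n 1
}

# Usage on the build machine (hypothetical binary path):
#   objdump -T target/armv7-unknown-linux-gnueabihf/release/my-app | max_glibc_required
# Usage on the Raspberry Pi, to see which GLIBC version is installed:
#   ldd --version | head -n 1
```

If the first number is higher than the second, the executable will refuse to start on the Pi.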

Attempt 4: developing and building remotely on a Linux machine with Cross

After a bit of searching, I came across Cross (pun not intended). Cross is a Rust tool for “Zero setup” cross compilation and “cross testing” of Rust crates. It spins up a container in order to build the crate. It has the same CLI as Cargo which makes it very easy to start using it.
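A minimal sketch of getting started with it, assuming a container engine (Docker or Podman) is already running and using the same assumed target triple as before. The function names are only there for illustration.

```shell
TARGET="armv7-unknown-linux-gnueabihf"   # assumed target triple

# Cross is installed like any other Cargo binary.
install_cross() {
    cargo install cross
}

# Takes the same arguments as `cargo build`, but the build runs inside a
# container that ships the toolchain for the target.
build_with_cross() {
    cross build --release --target "$TARGET"
}

# Usage, from the project root:
#   install_cross
#   build_with_cross
```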

Full of hope, I installed it, built the project, deployed the executable and ran straight into the next issue.

It took me a while to puzzle this one out. Trying to use features = ["dynamic"] in the build configuration (as well as other tweaks suggested for this scenario) didn’t get me much further, and it was only many attempts later that I realized that the C library on Raspbian OS is really quite old.

Attempt 5: developing and building remotely on a Linux machine with Cross and Ubuntu on the Raspberry Pi

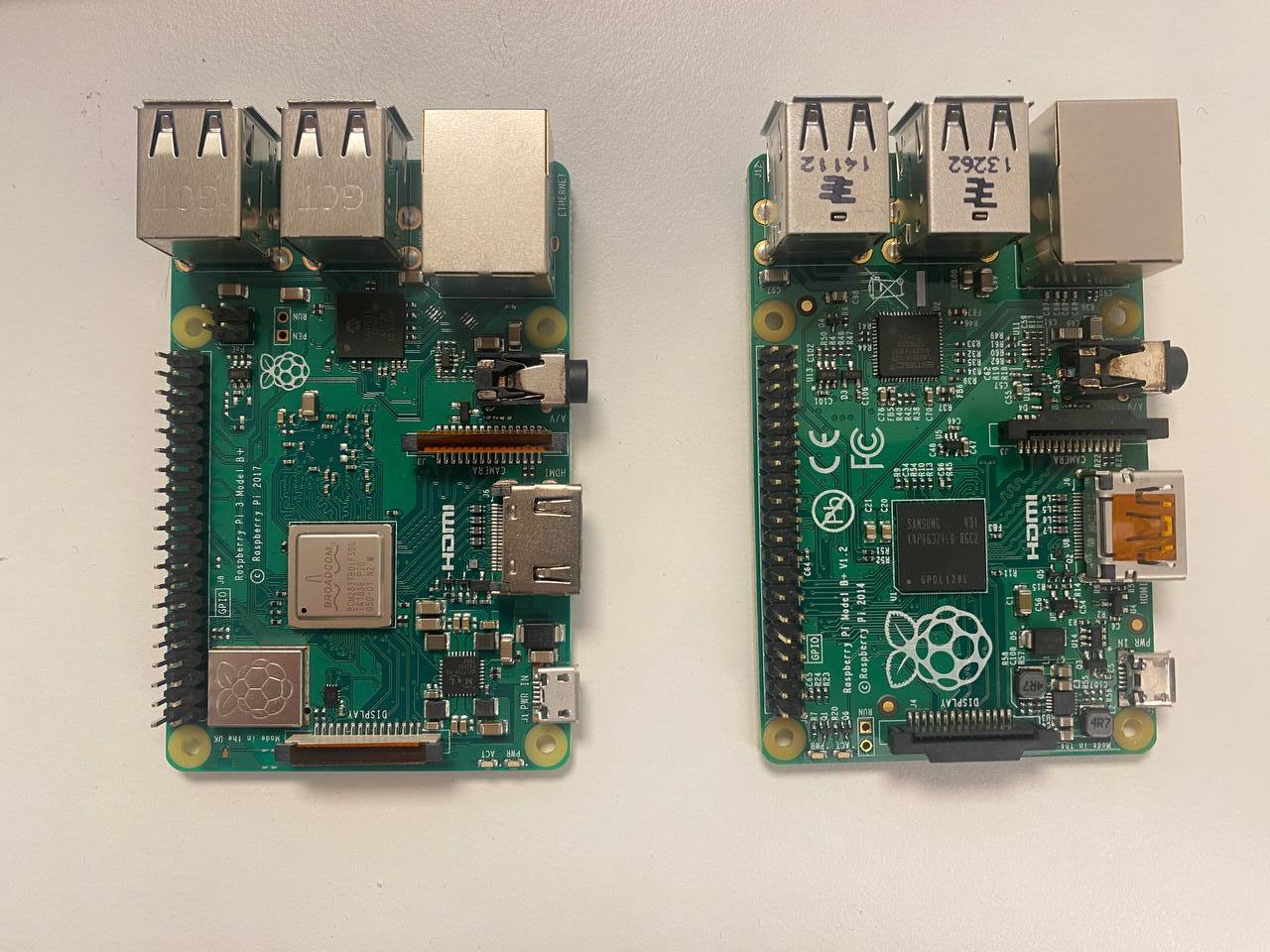

I finally resolved to install an up-to-date OS on the Pi, namely Ubuntu. It took me yet more time to realize that the Raspberry Pi I was using was not a 3 B+ (like all the others I had lying around) but an elderly Pi 1 B+, which explained why the Pi wouldn’t start at all. That’s what you get for having too many Pis scattered across your office.

This finally did the trick with regard to getting the executable to run, but I was still stuck with a Linux desktop machine with a far too noisy fan.

Attempt 6: developing and building on macOS with Cross

Since Cross should also work on macOS, I attempted to scale back the setup and build directly on the Mac.

This works well for building. The only annoyance is Docker for Mac complaining about running a Linux image.

There is apparently a way to fix this, but I didn’t manage to get rid of the message.

While this approach works, I find Docker on the Mac to be quite slow and noisy.

While this approach works with regard to the build, it fails for the development part on the Mac. Building the project locally on the Mac (in order to get IDE support) fails, as some crates for Linux flat out refuse to build on macOS.

On we go.

Attempt 7: developing and building semi-remotely on the Mac with a virtual machine

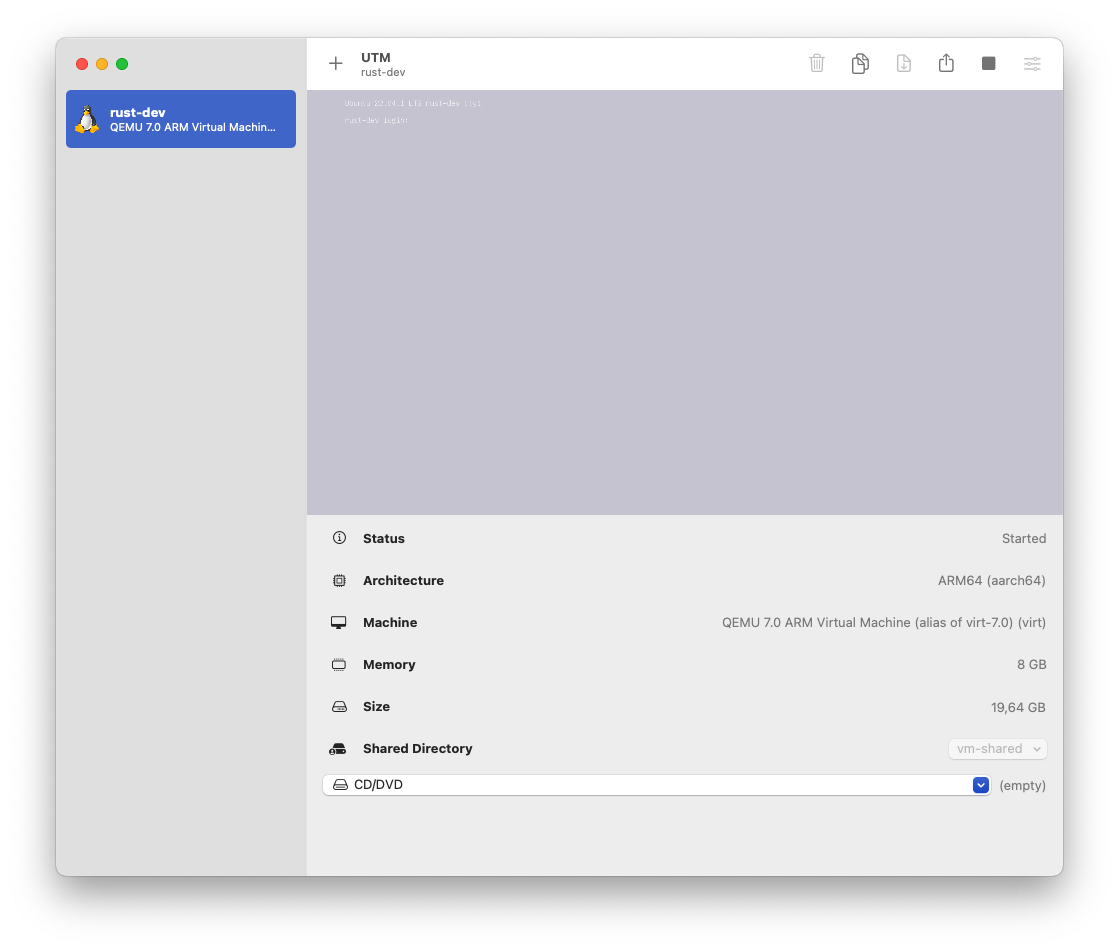

UTM uses Apple’s Hypervisor virtualization framework to run ARM64 operating systems on Apple Silicon at near native speeds.

I went on to install UTM and create a virtual machine with the ARM version of Ubuntu Server (see this article for a detailed walk-through).

With this approach, it is possible to “locally” develop using JetBrains Gateway against the virtual machine and have full IDE support and local builds that work.

What doesn’t work is cross-compilation with Cross, because it doesn’t support aarch64/arm64 hosts yet, as outlined in this issue.

Attempt 8: developing semi-remotely on the Mac with a virtual machine, building on the Mac with macOS using file sharing

Since we can develop inside of the VM and build on the Mac directly using cross, let’s combine both approaches and find a way to share the files.

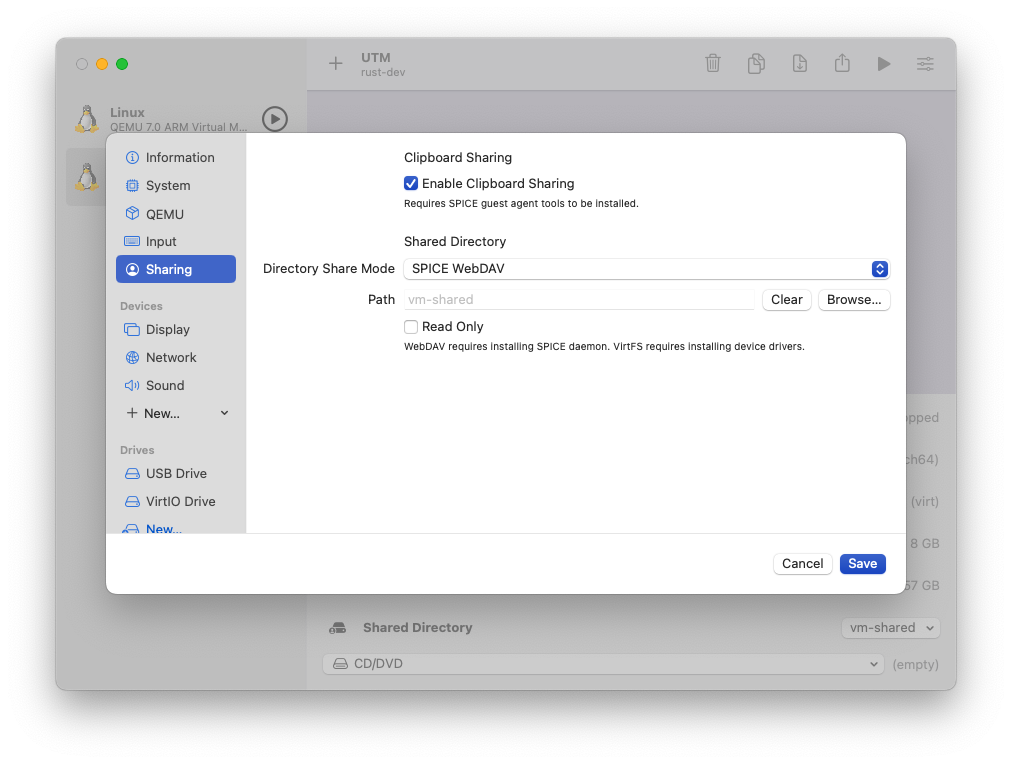

As it turns out, UTM / QEMU supports sharing folders between the host and guest.

With SPICE WebDAV

SPICE WebDAV has to be installed on the Ubuntu Server guest before the shared directory can be mounted there.

This also requires configuring the virtual machine in UTM to use this folder sharing mode.

However, running cargo on this shared directory… fails (some files randomly cannot be written, and WebDAV is slow with a Linux guest).

With VirtFS

In order to use VirtFS, the required kernel modules first have to be enabled in the guest, followed by a restart of the VM.

The directory can then be mounted inside the guest.
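As a sketch of what these two steps can look like on an Ubuntu guest: the module names and mount options below are the usual ones for a QEMU 9p share, while the mount tag `share` and the mount point are assumptions that may differ in a given UTM setup. Loading the modules with `modprobe` only lasts until the next reboot.

```shell
SHARE_TAG="share"         # assumed mount tag of the directory shared by UTM
MOUNT_POINT="/mnt/utm"    # hypothetical mount point inside the guest

# Loads the kernel modules needed for 9p over virtio for the current boot.
enable_9p_modules() {
    for module in 9p 9pnet 9pnet_virtio; do
        sudo modprobe "$module" || return 1
    done
}

# Mounts the shared directory using the 9p2000.L protocol variant.
mount_virtfs_share() {
    sudo mkdir -p "$MOUNT_POINT" &&
    sudo mount -t 9p -o trans=virtio,version=9p2000.L "$SHARE_TAG" "$MOUNT_POINT"
}

# Usage inside the guest:
#   enable_9p_modules && mount_virtfs_share
```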

Cargo runs much faster with this approach, but unfortunately it doesn’t run reliably this way either.

This was caused by a bug in QEMU that has since been fixed, but UTM doesn’t appear to be using the latest version of QEMU yet.

Attempt 9: same as above, but with rsync

I finally resorted to using rsync to synchronize the files from the development machine before each build. This does, so far, conclude my journey to an efficient workflow for developing in Rust for the Raspberry Pi from a MacBook Pro with Apple Silicon. Unfortunately, JetBrains Gateway is rather unstable, even when connecting to a local VM.
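A sketch of such a sync-then-build step, run on the Mac. The host name, user and paths are all hypothetical, and the target triple is the same assumption as before. Note that `--delete` removes local files that no longer exist on the VM, while the excluded `target/` directory is left alone on both sides.

```shell
TARGET="armv7-unknown-linux-gnueabihf"    # assumed target triple
VM_HOST="ubuntu@utm-vm"                   # hypothetical SSH user and host of the VM
VM_PROJECT="projects/my-app/"             # hypothetical path on the VM, relative to the home directory
LOCAL_PROJECT="$HOME/projects/my-app/"    # hypothetical path on the Mac

# Mirrors the sources from the VM to the Mac, skipping build artifacts.
# The trailing slashes make rsync copy the directory contents.
sync_from_vm() {
    rsync -az --delete --exclude 'target/' "$VM_HOST:$VM_PROJECT" "$LOCAL_PROJECT"
}

# Synchronizes first, then builds on the Mac with Cross.
sync_and_build() {
    sync_from_vm &&
    (cd "$LOCAL_PROJECT" && cross build --release --target "$TARGET")
}

# Usage on the Mac:
#   sync_and_build
```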

Attempt 10: VSCode and remote development

I ended up switching to VSCode for remote development over SSH in order to connect to the local UTM virtual machine. This is much more stable than JetBrains Gateway and Rust support is good.
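VSCode’s Remote - SSH extension picks up hosts from the regular SSH client configuration, so giving the VM an alias there makes it easy to select. The sketch below prints such an entry. The alias, address and user name are hypothetical and need to match the actual VM.

```shell
VM_ALIAS="utm-vm"            # hypothetical alias for the VM
VM_ADDRESS="192.168.64.2"    # hypothetical IP address of the guest
VM_USER="ubuntu"             # hypothetical user name on the guest

# Prints an SSH client configuration entry for the VM.
ssh_config_entry() {
    cat <<EOF
Host $VM_ALIAS
    HostName $VM_ADDRESS
    User $VM_USER
EOF
}

# Usage on the Mac:
#   ssh_config_entry >> ~/.ssh/config
# Then run "Remote-SSH: Connect to Host..." in VSCode and pick the alias.
```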

In summary

- get UTM and install Ubuntu Server for ARM

- use VSCode to develop on the Mac, using the Linux VM as backend

- make sure to use Ubuntu Server on the Raspberry Pi (or something else) and not Raspbian, whose C library is too old

- use Cross on the Mac to build the project for the Raspberry Pi

- use rsync to keep the project files on the host and the VM in sync